Hi all

I use Infinispan as an embedded cache and run criteria queries against the cache. It works great!

Unfortunately, it seems like there is a memory leak somewhere in the job submission logic. We are getting this error: 2020-02-18 10:22:10,020 INFO org.apache.flink.runtime.executiongraph.ExecutionGraph - OPERATORNAME switched from.

I have two questions:


After investigation, I think there is no memory leak in the ProxyFactory or PropertyInfoRegister; all of these objects can be garbage-collected. The only thing causing the leak is the proxy classes held in the class loader: ModelMapper creates new classes each time you call addMapping. Note that if an application has a memory leak, increasing the heap size does not help; the leak itself needs to be fixed. This is the ‘Metaspace is too small’ problem. Metaspace is the memory area that stores class metadata. Starting with Java SE 8, metaspace is no longer part of the heap, although running out of space in metaspace can still trigger a GC.
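To watch this effect from inside the JVM, the standard java.lang.management API exposes both the class-loading counters and the metaspace memory pool. The sketch below is not ModelMapper's code: it uses java.lang.reflect.Proxy with a fresh class loader per call as a stand-in for a library that generates a new class on every call, keeps strong references to the results (as a leaking application would), and reports how many classes were loaded. The class name `ProxyMetaspaceDemo` is hypothetical, and the pool name "Metaspace" is what HotSpot reports; other JVMs may name it differently, so the code returns -1 if the pool is absent.

```java
import java.lang.management.ClassLoadingMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

final class ProxyMetaspaceDemo {

    /** Used bytes of the HotSpot "Metaspace" pool, or -1 if this JVM has no such pool. */
    static long metaspaceUsed() {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            if ("Metaspace".equals(pool.getName())) {
                return pool.getUsage().getUsed();
            }
        }
        return -1;
    }

    /**
     * Defines n proxy classes, each in its own throwaway class loader, and keeps the
     * proxies reachable through 'keep'. Returns how many classes were loaded meanwhile.
     */
    static long defineProxyClasses(int n, List<Object> keep) {
        ClassLoadingMXBean classes = ManagementFactory.getClassLoadingMXBean();
        long before = classes.getTotalLoadedClassCount(); // monotonic, unaffected by unloading
        for (int i = 0; i < n; i++) {
            ClassLoader loader = new ClassLoader(ProxyMetaspaceDemo.class.getClassLoader()) { };
            // A new loader means Proxy cannot reuse a cached class, so a new class is generated.
            Object proxy = Proxy.newProxyInstance(
                    loader, new Class<?>[] {Runnable.class}, (p, method, margs) -> null);
            keep.add(proxy); // strong reference pins the proxy class and its defining loader
        }
        return classes.getTotalLoadedClassCount() - before;
    }

    public static void main(String[] args) {
        List<Object> keep = new ArrayList<>();
        long metaBefore = metaspaceUsed();
        long loaded = defineProxyClasses(1_000, keep);
        long metaAfter = metaspaceUsed();
        System.out.println("classes loaded: " + loaded);
        System.out.println("metaspace used before/after: " + metaBefore + " / " + metaAfter);
    }
}
```

Dropping the `keep` list would make the proxies, their classes, and their loaders eligible for unloading at a later GC; holding on to them is what turns class generation into a metaspace leak.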

1) I start Infinispan in my application and call embeddedCacheManager.stop() when the application is destroyed.

I run my application on an Oracle WebLogic 12.1.3 server, and after redeploying my application I see a metaspace memory leak.

I have checked a heap dump in Memory Analyzer.

The cause is the thread-local variable threadCounterHashCode in the org.infinispan.commons.util.concurrent.jdk8backported.EquivalentConcurrentHashMapV8$CounterHashCode class.

After the application is undeployed, there is still a thread that holds a thread-local reference to threadCounterHashCode. threadCounterHashCode keeps a reference to the class loader, and that is why the class loader cannot be removed from metaspace.

I cannot control this thread.

How should I clean up memory in this case? Is it even possible?
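The mechanism described in question 1 can be reproduced without Infinispan or WebLogic. In the sketch below, a single-thread executor plays the role of the container's long-lived worker thread, and a hypothetical `AppState` value in a static ThreadLocal stands in for threadCounterHashCode (like any object, it references its class, which references its defining class loader). A value set by one "deployment" is still visible to a later task on the same thread, so it stays strongly reachable; only a remove() executed on that same thread releases it. The names `ThreadLocalLeakDemo`, `AppState`, and `STATE` are illustrative and are not Infinispan internals.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class ThreadLocalLeakDemo {

    /** Hypothetical stand-in for a per-thread value whose class belongs to the application. */
    static final class AppState { }

    /** Hypothetical stand-in for the library's static thread-local. */
    static final ThreadLocal<AppState> STATE = new ThreadLocal<>();

    /**
     * Runs a "deployment" task that sets the thread-local, then a later task on the same
     * pooled thread that checks whether the value is still there. Returns true if it survived.
     */
    static boolean survivesOnPooledThread(boolean cleanUp) throws Exception {
        ExecutorService worker = Executors.newSingleThreadExecutor(); // one long-lived thread
        try {
            worker.submit(() -> {
                STATE.set(new AppState()); // library code caches a value on this thread
                if (cleanUp) {
                    STATE.remove();        // only effective when run on the same thread
                }
            }).get();
            // A later task on the same thread, after the application was "undeployed".
            return worker.submit(() -> STATE.get() != null).get();
        } finally {
            worker.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println("without remove(): still reachable = " + survivesOnPooledThread(false));
        System.out.println("with remove():    still reachable = " + survivesOnPooledThread(true));
    }
}
```

Because the thread in the post is not under the application's control, the application cannot issue that remove() itself, which is what makes the cleanup question hard.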

2) Cache initialization in a single thread takes nearly 50 seconds for 40,000 entities.

I would like to speed up this process. How can I do it?
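One common way to shorten a single-threaded bulk load is to split the entities into chunks and load them from several threads. The sketch below assumes the cache can be treated as a java.util.concurrent.ConcurrentMap (Infinispan's Cache interface does extend ConcurrentMap) and uses a ConcurrentHashMap so it runs standalone. `ParallelCacheLoader`, `loadInParallel`, the chunk size, and the thread count are illustrative choices, not Infinispan settings. Whether this helps in practice depends on where the 50 seconds go: if indexing for the criteria queries dominates, the indexing configuration may matter more than the thread count.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Function;

final class ParallelCacheLoader {

    /** Puts every entity into the cache, keyed by keyFn, using 'threads' worker threads. */
    static <K, V> void loadInParallel(ConcurrentMap<K, V> cache, List<V> entities,
                                      Function<V, K> keyFn, int threads, int chunkSize)
            throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<?>> pending = new ArrayList<>();
            for (int from = 0; from < entities.size(); from += chunkSize) {
                List<V> chunk = entities.subList(from, Math.min(from + chunkSize, entities.size()));
                pending.add(pool.submit(() -> {
                    Map<K, V> batch = new HashMap<>();
                    for (V entity : chunk) {
                        batch.put(keyFn.apply(entity), entity);
                    }
                    // One putAll per chunk means fewer calls into the cache; whether the
                    // cache batches them internally is implementation-specific.
                    cache.putAll(batch);
                }));
            }
            for (Future<?> f : pending) {
                f.get(); // rethrows any failure from a worker
            }
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        List<String> entities = new ArrayList<>();
        for (int i = 0; i < 40_000; i++) {
            entities.add("entity-" + i);
        }
        ConcurrentMap<Integer, String> cache = new ConcurrentHashMap<>();
        long start = System.nanoTime();
        loadInParallel(cache, entities, e -> Integer.parseInt(e.substring(7)), 4, 1_000);
        System.out.printf("loaded %d entries in %d ms%n",
                cache.size(), (System.nanoTime() - start) / 1_000_000);
    }
}
```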

Detect memory leaks early and prevent OutOfMemoryErrors using Java Flight Recordings.

Detecting a slow memory leak can be hard. A typical symptom is that the application becomes slower after running for a long time due to frequent garbage collections. Eventually, OutOfMemoryErrors may be seen. However, memory leaks can be detected early, even before a problem occurs, by using Java Flight Recordings.

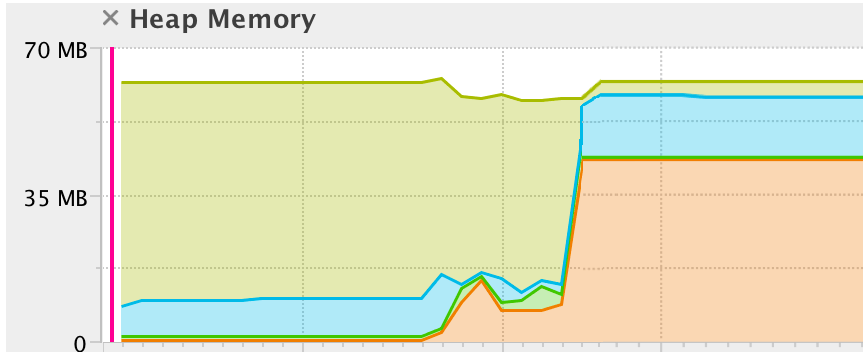

Watch whether the live set of your application increases over time. The live set is the amount of Java heap that is used after an old collection (when all objects that are not live have been garbage collected). The live set can be inspected in many ways: run with the -verbose:gc option, or connect to the JVM using the JMC JMX Console and look at the com.sun.management.GarbageCollectorAggregator MBean. Another easy approach is to take a flight recording.
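For a quick in-process check of the same number, the standard MemoryMXBean can request a collection and then report heap usage. The sketch below treats "heap used right after MemoryMXBean.gc()" as an approximation of the live set. That is an assumption: gc() is only a request (it does nothing under -XX:+DisableExplicitGC), and depending on collector settings the explicit GC may not be a full old collection. The class name `LiveSetProbe` is hypothetical; the sketch also prints per-collector counts from the platform GarbageCollectorMXBeans.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;

final class LiveSetProbe {

    /** Requests a GC, then returns the used heap in bytes as an approximate live set. */
    static long approximateLiveSetBytes() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        memory.gc(); // equivalent to System.gc(): a request, not a guarantee
        return memory.getHeapMemoryUsage().getUsed();
    }

    public static void main(String[] args) {
        System.out.printf("approximate live set: %.2f MB%n",
                approximateLiveSetBytes() / (1024.0 * 1024.0));
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.println(gc.getName() + ": " + gc.getCollectionCount() + " collections, "
                    + gc.getCollectionTime() + " ms total");
        }
    }
}
```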


Enable Heap Statistics when you start your recording; this triggers an old collection at the start and at the end of the recording. This may add some latency to the application, but Heap Statistics generates accurate live set information. If you suspect a fairly quick memory leak, take a profiling recording that runs for an hour, for example. Click the Memory tab and select the Garbage Collections tab to inspect the first and the last old collections, as shown in Figure 3-1.

Figure 3-1 Debug Memory Leaks - Garbage Collection Tab
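The recording itself can also be started from code with the jdk.jfr API (available in JDK 11 and later). The sketch below uses the built-in "profile" settings, writes the result to a destination file when the recording stops, and additionally enables the jdk.ObjectCount event. Treating that event as the equivalent of JMC's "Heap Statistics" option is an assumption worth checking against your JMC template. The class name `HeapLeakRecording` and the file name are hypothetical; the resulting .jfr file is then opened in JMC as described above.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;
import jdk.jfr.Configuration;
import jdk.jfr.Recording;

final class HeapLeakRecording {

    /**
     * Records for the given duration with the "profile" settings plus object-count
     * events, and writes the data to 'destination' when the recording stops.
     */
    static void record(Duration duration, Path destination) throws Exception {
        Configuration profile = Configuration.getConfiguration("profile");
        try (Recording recording = new Recording(profile)) {
            recording.enable("jdk.ObjectCount"); // assumption: JMC's "Heap Statistics" switch
            recording.setDestination(destination);
            recording.start();
            Thread.sleep(duration.toMillis()); // the application keeps running meanwhile
            recording.stop();                  // data is written to 'destination' here
        }
    }

    public static void main(String[] args) throws Exception {
        Path out = Path.of("leak-check.jfr");
        // For a suspected quick leak this might be Duration.ofHours(1); kept short here.
        record(Duration.ofSeconds(10), out);
        System.out.println("wrote " + Files.size(out) + " bytes to " + out.toAbsolutePath());
    }
}
```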



Select the first old collection, as shown in Figure 3-1, to look at the heap data and heap usage after GC. In this recording, heap usage after GC is 34.10 MB. Now look at the same data for the last old collection in the list, and check whether the live set has grown. Before taking the recording, you must allow the application to start and reach a stable state.


If the leak is slow, you can take a shorter 5-minute recording, and then take another one, for example 24 hours later (depending on how fast you suspect the leak is). Your live set will naturally go up and down, but if you see a steady increase over time, you could have a memory leak.
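Comparing samples by eye works, but the "steady increase" check can also be written down. The sketch below takes live-set samples (in MB) taken at regular intervals, for example one per recording, fits a least-squares line, and flags a possible leak when the slope is positive and the last sample is at least a given fraction above the first. The class name `LiveSetTrend`, the 5% threshold, and the sample values are made up for illustration, and a flagged result is only a hint to look closer, not proof of a leak.

```java
final class LiveSetTrend {

    /** Least-squares slope of the samples against their index (units per sample). */
    static double slope(double[] samples) {
        int n = samples.length;
        if (n < 2) {
            throw new IllegalArgumentException("need at least two samples");
        }
        double meanX = (n - 1) / 2.0;
        double meanY = 0;
        for (double y : samples) {
            meanY += y;
        }
        meanY /= n;
        double num = 0;
        double den = 0;
        for (int i = 0; i < n; i++) {
            num += (i - meanX) * (samples[i] - meanY);
            den += (i - meanX) * (i - meanX);
        }
        return num / den;
    }

    /** Positive trend and last sample at least minGrowth (e.g. 0.05 = 5%) above the first. */
    static boolean looksLikeLeak(double[] samples, double minGrowth) {
        double first = samples[0];
        double last = samples[samples.length - 1];
        return slope(samples) > 0 && last >= first * (1 + minGrowth);
    }

    public static void main(String[] args) {
        double[] noisyButFlat = {34.1, 36.0, 33.5, 35.2, 34.0};
        double[] creepingUp = {34.1, 35.0, 34.8, 36.9, 38.2};
        System.out.println(looksLikeLeak(noisyButFlat, 0.05)); // false
        System.out.println(looksLikeLeak(creepingUp, 0.05));   // true
    }
}
```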
